Seeing how well an agentic AI coding tool can do compared to me using an actual real-world example

As you may or may not know, I am in the camp of those concerned about existential risk from AI (don’t know what that is? There are plenty of good explainers out there, from the more accessible to the more advanced). As part of that ongoing interest in not seeing me and everything I love melted down for raw materials, I try to keep up with developments in AI capabilities. Recently, though it has little to do with that giant world-ending problem, I stumbled across a more mundane dispute that overlaps with my other interests:

In a sense, I think this Stokes guy is right. Capabilities are improving at tremendous speed, and anyone like me expecting AI to get to the point where it can conquer the world (or, more optimistically, all jobs) should naturally expect to see it master software development sometime before then. But the point of contention here is whether current AI systems can even provide significant productivity gains to programmers working on meaningful, complex systems and… ha… haha… yeah, I’m very much on Blow’s side on that one.

But, y’know, anyone can just toss that opinion out there. Fortunately for me though, it doesn’t seem like many skilled programmers who hold the “current AI is incompetent” view have actually bothered to substantiate their point (at least, with an article like this). I feel like, when I see these sorts of disputes, a lot of people are missing context. Everyone agrees the AI isn’t perfect, but what exactly is the AI failing at, and why does it seem to matter so much more to some people? As someone who has tried AI out in multiple contexts, I thought it would be valuable to give the “strongest” tools a fair shot and closely scrutinize and critique the actual outputs. Who knows, maybe I’ll get a benchmark named after me[1].

The Task

The task is simple, and so are the rules:

I have recently added a simple camera shake feature to the game I’m working on. This was done without any AI assistance. Based on the edit history of the relevant files, this took me about 30 minutes.

I will branch off from just before I added the feature, then use an AI tool to recreate it. My goal is not necessarily to replicate my implementation perfectly, but to prompt the AI and make edits until its version of the feature is basically functional. I will then critique the code it provided throughout this process.

If the AI tool and I cannot achieve basic functionality within 30 minutes (counted from when I start typing the first prompt for the AI), I will critique whatever work it was able to do in that time.

Frankly, I’m throwing the AI a bit of a softball here. The problem is not too difficult, we’re not doing any major overhauls to the program’s structure, we’re not adding a feature that touches multiple systems, and most of the structure is already in place, no need to add any entirely new systems. This is partly to keep this test easy to run, partly to keep things short and understandable for beginners, and partly as an additional sneaky test for the AI: can it recognize that there’s no need to overcomplicate things?

My implementation

Before we give the AI a shot, let’s take a look at my existing implementation to get an idea of where our standards are at.

As mentioned, the goal was to create a camera shake system. Specifically, I wanted a function I could call which:

Takes a duration (in frames) and a force (in pixels) and shakes the camera that much, for that long.

Calling the function multiple times should create an additive effect, so multiple sources of camera shake can add up to create a stronger overall effect.

I’d also like the ability to limit the shake to only the x or y axis.

Before I started, the camera was mainly controlled by the stage system, and its final position primarily depended on three global vector2 variables:

stage.target_camera_pos: Primarily meant to be messed around with by entities in the stage. After setting this value, the camera’s center position will (later in the frame, when the camera updates) be moved to this value. At the moment, the camera will always snap instantly to this position, but in the future I may have it move more smoothly/gradually towards it.

stage.camera_pos: The camera’s actual position in the stage. If you imagine the camera’s view as a rectangle, this position would be in the top left.

camera.pos: When the game draws anything, this value is applied such that the camera system works as intended. This value is extremely volatile and is mainly intended to be a cached value that can be quickly accessed and used in drawing functions without needing to cast or modify the value. When changing drawing contexts (such as when drawing to a separate texture), a new camera position is pushed onto a stack, and is popped off said stack when returning to the previous drawing context. Whenever the stack changes, this value is updated to reflect the top value of the stack. Also corresponds to the camera’s top-left position. stage.camera_pos gets pushed onto the base of the stack when the current stage begins rendering.
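If the push/pop behavior of camera.pos is hard to picture, here is a minimal sketch of it. It is written in Python rather than the game's actual Odin, and the `Camera` class and its `push`/`pop` methods are names invented purely for illustration; only the "cache the top of the stack" behavior comes from the description above.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec2 = Tuple[float, float]

@dataclass
class Camera:
    # Cached copy of the top of the stack, read directly by drawing code.
    pos: Vec2 = (0.0, 0.0)
    _stack: List[Vec2] = field(default_factory=list)

    def push(self, new_pos: Vec2) -> None:
        """Enter a new drawing context (e.g. drawing to a separate texture)."""
        self._stack.append(new_pos)
        self.pos = new_pos

    def pop(self) -> None:
        """Return to the previous drawing context and refresh the cache."""
        self._stack.pop()
        # Assumption: an empty stack falls back to the origin.
        self.pos = self._stack[-1] if self._stack else (0.0, 0.0)
```

In this sketch, the stage renderer would call `push` with stage.camera_pos when it begins rendering, so that value sits at the base of the stack.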

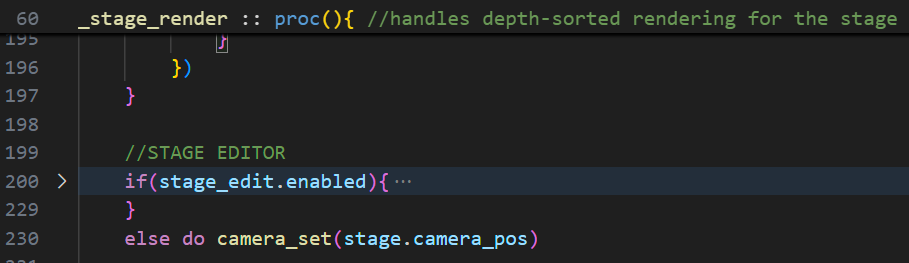

Here’s what the stage system update (which was basically just camera code) looked like:

In summary:

If the camera is tracking a specific entity, set the target position accordingly.

Set stage.camera_pos based on stage.target_camera_pos.

Clamp stage.camera_pos to the stage bounds.
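Those three steps can be sketched roughly as follows. This is Python, not the Odin from the game, and the function name, its parameters, and the assumption that the stage's top-left corner sits at (0, 0) and is at least as large as the view are all mine for the sake of the example.

```python
def clamp(value, low, high):
    return max(low, min(value, high))

def camera_update(target_camera_pos, tracked_entity_pos, view_size, stage_size):
    """Return (target_camera_pos, camera_pos) for this frame."""
    # 1. If the camera is tracking an entity, the target follows it.
    if tracked_entity_pos is not None:
        target_camera_pos = tracked_entity_pos

    # 2. The target is the view's center, while camera_pos is its top-left
    #    corner, so shift by half the view size. The camera snaps instantly.
    x = target_camera_pos[0] - view_size[0] / 2
    y = target_camera_pos[1] - view_size[1] / 2

    # 3. Keep the view rectangle inside the stage bounds.
    x = clamp(x, 0.0, stage_size[0] - view_size[0])
    y = clamp(y, 0.0, stage_size[1] - view_size[1])
    return target_camera_pos, (x, y)
```

For example, tracking an entity near the top-left corner of a large stage pins the camera to the stage edge instead of showing the void beyond it.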

camera.pos is then set via the provided function in the stage rendering code, right before it starts drawing stuff:

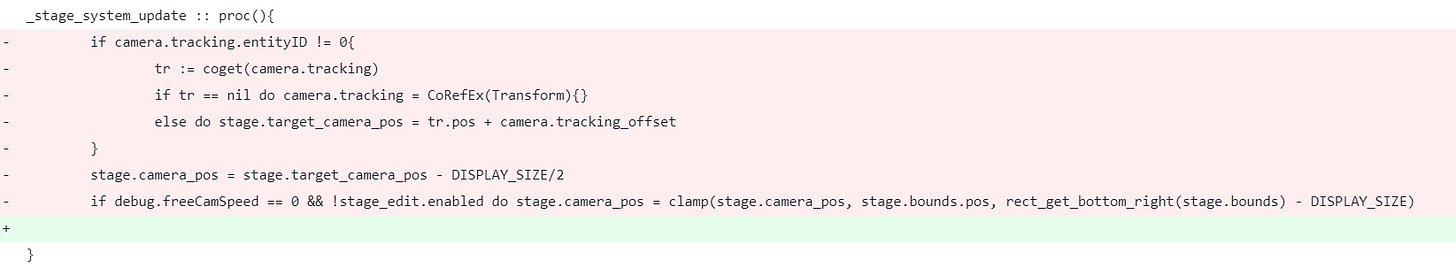

Alright, with all that preamble out of the way, let’s look at the changes made. Since I was finally adding per-frame camera-related behavior that arguably wasn’t also closely tied to the stage system, I first decided to move all the camera code in the stage system to a camera-specific update function:

That left _stage_system_update empty, but I decided to keep it around in case I want to add anything non-camera related to it later.

The simplest form of camera shake essentially just involves adding some random value to the camera’s “unshaken” position every frame. With that in mind, and since _camera_system_update is intended to be the “end point” for any camera changes in a frame (i.e. any special camera behaviors I add in the future will either be called inside the function or before it), I decided to implement camera shake by modifying stage.camera_pos after we’ve set it in _camera_system_update. Since I wanted the camera to be able to shake slightly outside the room bounds, I decided to add the shaking code after we clamp stage.camera_pos.

But I still needed some values to refer to in order to determine how much shaking to apply. Since we want shaking to be able to come from different sources, which could shake things for different lengths of time and with different strengths, I decided the best way to proceed was to have a pool of shake “requests” that can be added to or removed from[2].

Each shake request stores how long it should keep on going for, as well as a force value. The force is stored as a vector2, allowing me to apply different amounts to the x and y axis.

Exposing this to the “user” (me) in the form of the originally specified function is trivial:
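As a rough picture of the request pool and the function in front of it, here is a minimal Python sketch (the real thing is Odin, and it is not reproduced here). `ShakeRequest`, `frames_left`, and `shake_requests` are hypothetical names, and accepting either a scalar or a pair for the force is an assumption about how the axis limiting is exposed.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class ShakeRequest:
    frames_left: int             # how many more frames this request lasts
    force: Tuple[float, float]   # shake strength in pixels, per axis

# The pool of active requests; the order of entries is irrelevant.
shake_requests: List[ShakeRequest] = []

def camera_shake(duration_frames, force):
    """Queue a shake. `force` is a scalar (both axes) or an (x, y) pair."""
    if isinstance(force, (int, float)):
        force = (force, force)
    fx, fy = force
    shake_requests.append(ShakeRequest(duration_frames, (float(fx), float(fy))))

camera_shake(10, 4)          # 4 pixels on both axes for 10 frames
camera_shake(30, (0, 2))     # vertical-only shake: no force on the x axis
```

Because every call just appends to the pool, overlapping calls stack up naturally, which is what gives the additive effect.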

With all that out of the way, applying the actual shake is simple:

Loop through all the requests, adding up their force, updating their timers and removing them when they run out. The order of the requests doesn’t matter, so we can use an O(1) time unordered remove.

Once you have the total force, for both the x and y axis:

Clamp the force to avoid edge cases where it’s unnoticeable or overwhelming

Apply some randomness to make the camera shake feel more varied

Add or subtract (at random) the force value to the camera position
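Put together, the steps above look roughly like the sketch below. It is Python rather than the game's Odin, and several details are assumptions made only so the example runs: the `MIN_FORCE`/`MAX_FORCE` limits, the 0.5 to 1.0 random scaling, and skipping an axis entirely when its total force is zero (so that a vertical-only shake stays vertical).

```python
import random
from dataclasses import dataclass
from typing import Tuple

MIN_FORCE = 1.0    # hypothetical lower limit, in pixels
MAX_FORCE = 16.0   # hypothetical upper limit, in pixels

@dataclass
class ShakeRequest:
    frames_left: int
    force: Tuple[float, float]

def apply_shake(camera_pos, requests, rng=random):
    """Sum all active requests, age them, and return the shaken position."""
    total = [0.0, 0.0]
    i = 0
    while i < len(requests):
        req = requests[i]
        total[0] += req.force[0]
        total[1] += req.force[1]
        req.frames_left -= 1
        if req.frames_left <= 0:
            # Unordered remove: overwrite with the last element, then pop.
            # i is not advanced, so the swapped-in request is still visited.
            requests[i] = requests[-1]
            requests.pop()
        else:
            i += 1

    shaken = list(camera_pos)
    for axis in (0, 1):
        force = total[axis]
        if force <= 0.0:
            continue  # no shake requested on this axis
        force = min(max(force, MIN_FORCE), MAX_FORCE)   # clamp
        force *= rng.uniform(0.5, 1.0)                  # vary the strength
        shaken[axis] += force if rng.random() < 0.5 else -force
    return (shaken[0], shaken[1])
```

Calling this once per frame, after the camera position has been clamped to the stage bounds, lets the shake push the view slightly past the edges of the room.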

And voila! Now all that was left to do was test and see if it works. The easiest thing to do was just to throw something at the top of the player’s update loop, so that’s what I did:

Waow!

Here are some combined calls. With this code, the camera shake should increase significantly for 10 frames (1/6th of a second) whenever I click (I also made Pro flinch for effect):

Here’s vertical shake only:

Tubular!

And all in just about 30 changed lines!

Now let’s see how the AI handles it.

The AI’s implementation

While I have tried Claude Code in the past, for maximum fairness, I wrote the previous sections before running this test, and won’t be making any major edits to them after conducting the test.

You’ve been able to ask AI chatbots to spit out code for you for quite a while now, but more recently firms have begun offering “agentic” AI coding tools which can take a look at entire codebases and intelligently make changes. I’m not interested in comparing a bunch of different tools, so let’s just go with the best, which right now is…

Setting things up wasn’t too tough, now let’s jump right in. You’ve already seen the initial prompt earlier:

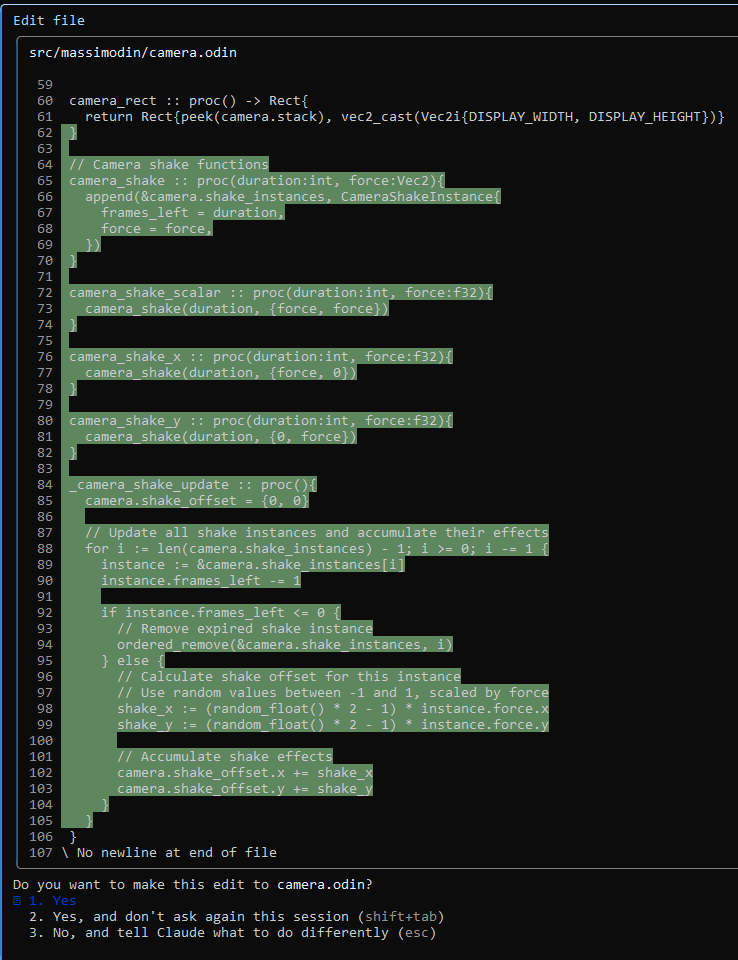

And here’s the first edit it came up with:

Impressive! The core idea is there, with only a little overcomplication compared to my implementation. Let’s see if we can correct that:

Nice.

It also remembers to init the data structure:

Here’s the main implementation:

Not bad. Here are the issues though:

The extra camera_shake functions are a nice touch, but given that Odin makes it easy to provide a Vec2 or a scalar to camera_shake, ultimately unnecessary.

It’s keeping a separate global variable for the shake offset. I didn’t catch that earlier; as you know, it’s unnecessary.

It doesn’t bother using Odin’s special loop syntax, making the loop definition a teensy bit harder to parse than it needs to be.

It uses an ordered_remove to get rid of expired “shake instances”, wasting time unnecessarily. Surprising, since it’s still looping through the instances in reverse.

It correctly applies a bit of randomness to the shake, but this is done in an overly wordy way. It also gets the random function’s name wrong (should be random_f). The exact way randomness is applied is also not quite the same as what I’m doing, but that’s not super important and it does result in fewer calls to the RNG for now. Since this is something I plan to refine later even in my own implementation, I’ll leave it like this for now.

It doesn’t clamp the shake, but given that I never asked it to do this I’ll give it a pass for now and come back to that later.
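On the ordered-versus-unordered remove point: looping in reverse is exactly what makes the cheap unordered remove safe, because the element swapped into the hole has already been visited. Here is a tiny Python illustration of the idea (not Claude's code or mine; `remove_expired` is a made-up helper operating on a plain list of timers):

```python
def remove_expired(timers):
    """Tick every timer once and drop the ones that reach zero."""
    for i in range(len(timers) - 1, -1, -1):
        timers[i] -= 1
        if timers[i] <= 0:
            # O(1) unordered remove: move the last element into slot i.
            # That element sits at a higher index, so it was already ticked.
            timers[i] = timers[-1]
            timers.pop()
    return timers
```

An ordered remove would instead shift every later element down by one slot, which is wasted work when nothing depends on the order.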

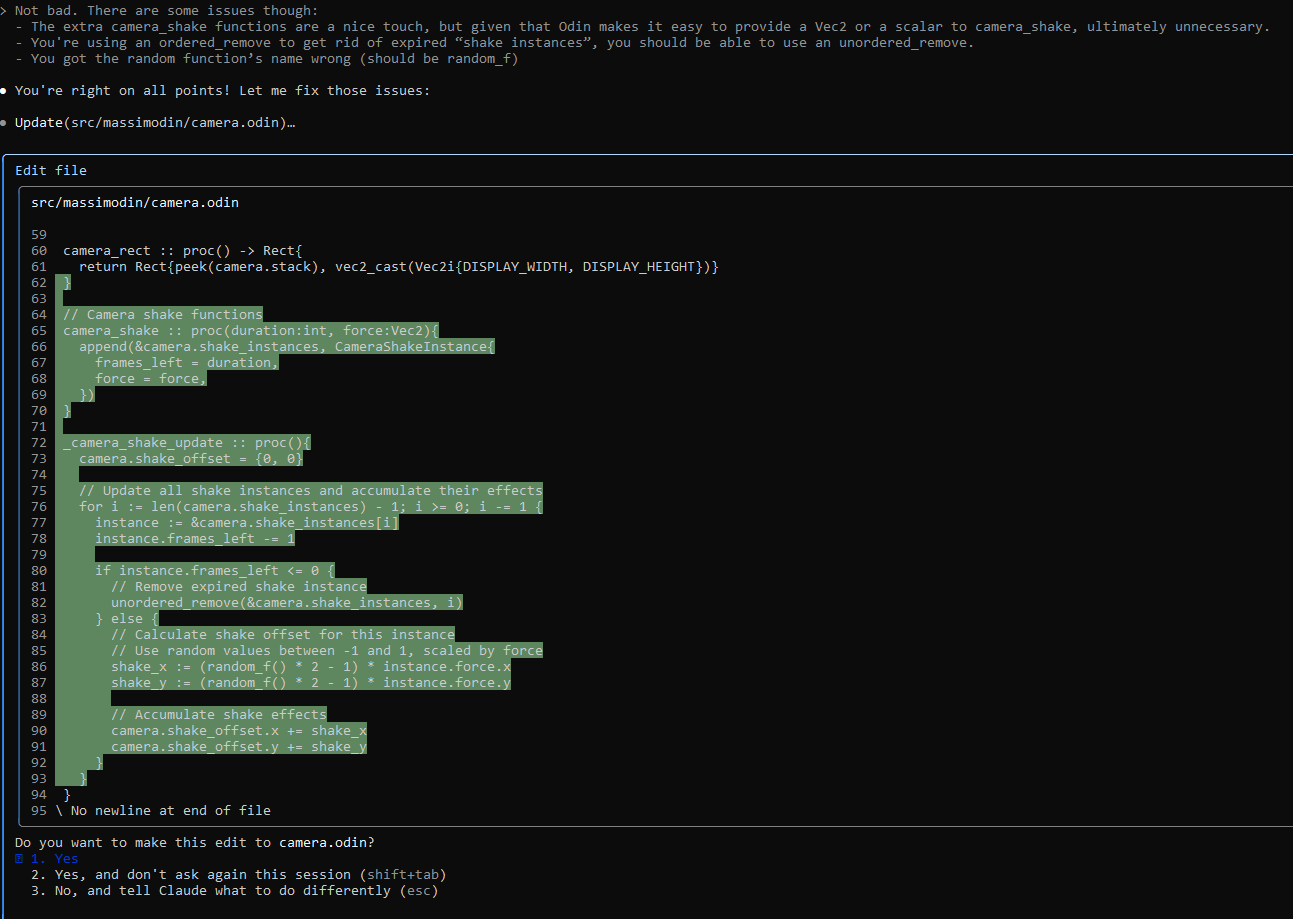

Let’s give it feedback on the major problems and see where it goes with it:

Still a bit more verbose than I’d like, but let’s move on.

Its plan is revealed! This comes before the clamp though, so let’s fix that, as well as the unnecessary global while we’re at it:

Cool.

It then tries to see if the code compiles, but I tell it that I have my own build pipeline and it should just add some test code and let me run it:

Good enough. Testing in-game, it all builds and works fine (Claude has a strange idea of “gentle”, but whatever).

Oh, let’s not forget:

Wow, that’s a bit much. Whatever though, also good enough.

How’d it do?

Frankly, pretty good! Claude was definitely off the mark at times, but with some scrutiny and feedback, it managed to get pretty close to our “ideal” implementation. Sure, its syntax is a little more verbose than necessary, and its architecture is a little less clean (still pretty well segmented though, nothing I’d consider egregiously wrong). Still, verbosity is a real cost: Claude sometimes taking a dozen lines to express what I can cleanly express in 1-2 can add up to serious problems in terms of readability and maintainability. I can always go clean it up, but man, that’s gonna add more time. Let’s check and see how long Claude and I were at it-

Oh. 50 minutes, huh? So much for 5x productivity gains…

In the end, I think this test isn’t going to satisfy either side of the debate very much. Those who believe in the current power of the tools are gonna feel like I spent too long studying and nitpicking every last detail of the AI’s implementation, squandering any productivity gains. The skeptics, on the other hand, will likely see this task as trivial, and not really proving anything about serious capabilities[3]. I think a more serious version of this would involve running the same process on the implementation of a large and complex system that took multiple days to complete and touched many different parts of the codebase (and didn’t have as straightforward of an implementation strategy). But that would be far more difficult to fit into a short, accessible post like this one, and I have better things to do[4]!!

Still, for any confused onlookers willing to really study the details shown here (I do it all for you, my lovelies), I think this exercise will offer you some insight into the current power of these tools. At least until the next big capabilities leap in 6 months completely obsoletes this post.

[1] Or more likely just get a bunch of people yelling at me that I’m not using the AI right.

[2] Since this is a dynamic memory structure, it needs to be initialized alongside the camera system. It exists for the lifetime of the program and we never need to worry about freeing it though, so we can just use the default allocator. “init” here is just a proc group I made for initializing data structures in general; it contains a proc that simply calls the built-in make_dynamic_array.

[3] And also, the fact I had already completed the task myself and knew exactly what to ask for is certainly giving the model a leg-up.

[4] But maybe I’ll do a part 2 the next time I use AI to help me build something from scratch.